Distilled version of ProtBert (https://huggingface.co/Rostlab/prot_bert/tree/main), intended for teaching purposes. I strongly discourage using it for scientific work.

Use the model

from transformers import BertTokenizer, AutoModelForMaskedLM

tokenizer = BertTokenizer.from_pretrained("Rostlab/prot_bert")

model = AutoModelForMaskedLM.from_pretrained("Agiottonini/ProtBertDistilled")
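The snippet above only loads the tokenizer and the model. As a minimal sketch of what a masked-residue prediction could look like, the example below formats a sequence the way the teacher's model card describes (uppercase residues separated by spaces, with the rare residues U, Z, O and B mapped to X) and wraps the two checkpoints in a `fill-mask` pipeline. The helper names `prepare_sequence` and `predict_masked_residue` are hypothetical, and it is an assumption that the distilled model expects the same input formatting as its teacher because it reuses the teacher's tokenizer.

```python
import re


def prepare_sequence(sequence: str) -> str:
    """Format a raw amino-acid string in the style used by Rostlab/prot_bert."""
    # Drop any whitespace and normalise to uppercase one-letter codes.
    sequence = re.sub(r"\s+", "", sequence).upper()
    # Map the rare residues U, Z, O, B to X, as the teacher's card recommends.
    sequence = re.sub(r"[UZOB]", "X", sequence)
    # The tokenizer expects one residue per whitespace-separated token.
    return " ".join(sequence)


def predict_masked_residue(spaced_sequence: str, top_k: int = 3):
    """Hypothetical helper: rank candidate residues for a single [MASK] position."""
    # Imported here so prepare_sequence stays usable without transformers installed.
    from transformers import BertTokenizer, pipeline

    tokenizer = BertTokenizer.from_pretrained("Rostlab/prot_bert")
    unmasker = pipeline(
        "fill-mask",
        model="Agiottonini/ProtBertDistilled",
        tokenizer=tokenizer,
    )
    return unmasker(spaced_sequence, top_k=top_k)


# Build an input with exactly one masked position.
masked = prepare_sequence("DLIPTSSKLVV") + " [MASK] " + prepare_sequence("DTSLQVKKAFFALVT")
# predictions = predict_masked_residue(masked)  # downloads both repositories
```

Each entry returned by the pipeline is a dictionary that includes the predicted token (`token_str`) and its probability (`score`). The call is left commented out because it needs network access to fetch the weights.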

Loss Formulation:

Same as here: https://huggingface.co/littleworth/protgpt2-distilled-tiny

Soft Loss:

$\mathcal{L}_{\text{soft}} = \mathrm{KL}\big(\mathrm{softmax}(s/T),\ \mathrm{softmax}(t/T)\big)$, where $s$ are the logits from the student model, $t$ are the logits from the teacher model, and $T$ is the temperature used to soften the probabilities.

Hard Loss:

$\mathcal{L}_{\text{hard}} = -\sum_i y_i \log\big(\mathrm{softmax}(s_i)\big)$, where $y_i$ represents the true labels and $s_i$ are the logits from the student model corresponding to each label.

Combined Loss:

$\mathcal{L} = \alpha\,\mathcal{L}_{\text{hard}} + (1 - \alpha)\,\mathcal{L}_{\text{soft}}$, where $\alpha$ (alpha) is the weight factor that balances the hard loss and the soft loss.
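To make the three terms concrete, here is a small worked sketch for a single token position, written with the standard library only so that every step is visible. It is not the training script: a real run would compute the same quantities with batched tensor operations. The function names are hypothetical. The KL term takes the argument order of the formula literally, with the student distribution first; many distillation implementations compute the divergence in the other direction, and the card does not say which one was used.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) = sum_i p_i * log(p_i / q_i)."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def soft_loss(student_logits, teacher_logits, temperature):
    # Assumption: argument order follows the formula as written (student first).
    return kl_divergence(
        softmax(student_logits, temperature),
        softmax(teacher_logits, temperature),
    )


def hard_loss(student_logits, true_index):
    # With a one-hot label, the cross-entropy sum keeps only the true class.
    return -math.log(softmax(student_logits)[true_index])


def distillation_loss(student_logits, teacher_logits, true_index, alpha, temperature):
    """Weighted sum: alpha * hard + (1 - alpha) * soft."""
    return alpha * hard_loss(student_logits, true_index) + (1 - alpha) * soft_loss(
        student_logits, teacher_logits, temperature
    )


# Illustrative values only; they are not taken from the model or its training run.
loss = distillation_loss(
    student_logits=[2.0, 0.5, -1.0],
    teacher_logits=[3.0, 0.2, -2.0],
    true_index=0,
    alpha=0.5,
    temperature=2.0,
)
print(f"combined loss: {loss:.4f}")
```

When the student and teacher logits are identical, the soft term is zero and the combined loss reduces to α times the hard loss, which is a quick sanity check for any implementation.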

Optimizer

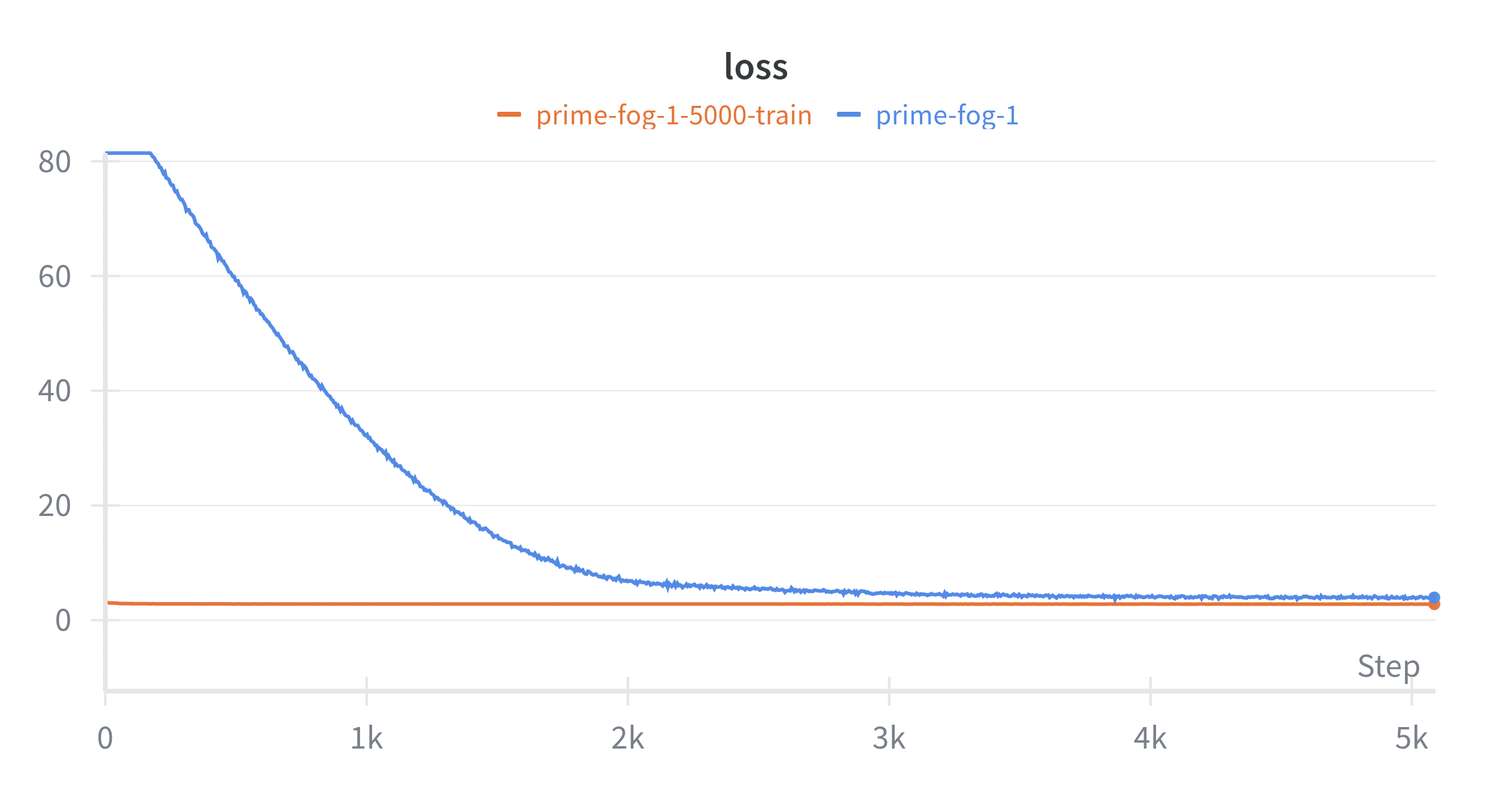

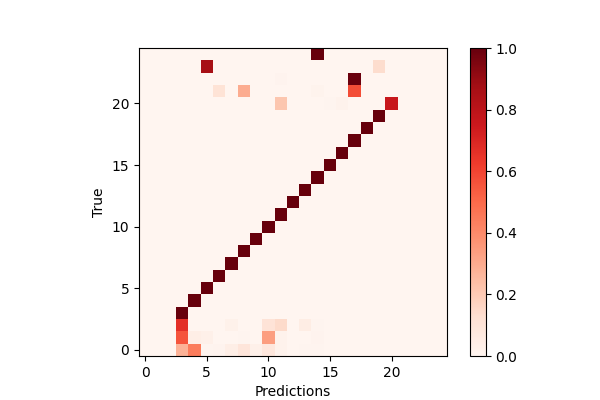

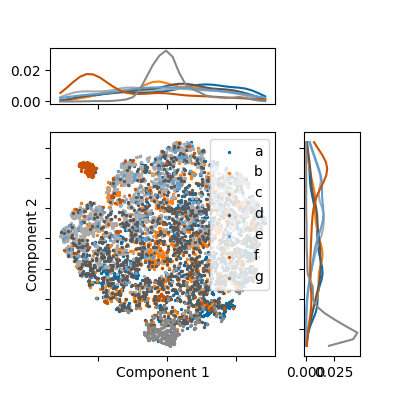

Some visualizations

Token prediction confusion matrix

SCOPe

Training script:

You can try creating your own model with this script
