url

stringlengths 61

61

| repository_url

stringclasses 1

value | labels_url

stringlengths 75

75

| comments_url

stringlengths 70

70

| events_url

stringlengths 68

68

| html_url

stringlengths 51

51

| id

int64 1.14B

2.92B

| node_id

stringlengths 18

18

| number

int64 3.75k

7.46k

| title

stringlengths 1

290

| user

dict | labels

listlengths 0

4

| state

stringclasses 2

values | locked

bool 1

class | assignee

dict | assignees

listlengths 0

3

| milestone

dict | comments

listlengths 0

30

| created_at

timestamp[ms] | updated_at

timestamp[ms] | closed_at

timestamp[ms] | author_association

stringclasses 4

values | sub_issues_summary

dict | active_lock_reason

null | body

stringlengths 1

47.9k

⌀ | closed_by

dict | reactions

dict | timeline_url

stringlengths 70

70

| performed_via_github_app

null | state_reason

stringclasses 3

values | draft

null | pull_request

null | is_pull_request

bool 1

class |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/datasets/issues/7167

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/7167/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/7167/comments

|

https://api.github.com/repos/huggingface/datasets/issues/7167/events

|

https://github.com/huggingface/datasets/issues/7167

| 2,546,708,014

|

I_kwDODunzps6Xy64u

| 7,167

|

Error Mapping on sd3, sdxl and upcoming flux controlnet training scripts in diffusers

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/90132896?v=4",

"events_url": "https://api.github.com/users/Night1099/events{/privacy}",

"followers_url": "https://api.github.com/users/Night1099/followers",

"following_url": "https://api.github.com/users/Night1099/following{/other_user}",

"gists_url": "https://api.github.com/users/Night1099/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/Night1099",

"id": 90132896,

"login": "Night1099",

"node_id": "MDQ6VXNlcjkwMTMyODk2",

"organizations_url": "https://api.github.com/users/Night1099/orgs",

"received_events_url": "https://api.github.com/users/Night1099/received_events",

"repos_url": "https://api.github.com/users/Night1099/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/Night1099/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Night1099/subscriptions",

"type": "User",

"url": "https://api.github.com/users/Night1099",

"user_view_type": "public"

}

|

[] |

closed

| false

| null |

[] | null |

[

"this is happening on large datasets, if anyone happens upon this i was able to fix by changing\r\n\r\n```\r\ntrain_dataset = train_dataset.map(compute_embeddings_fn, batched=True, new_fingerprint=new_fingerprint)\r\n```\r\n\r\nto\r\n\r\n```\r\ntrain_dataset = train_dataset.map(compute_embeddings_fn, batched=True, batch_size=16, new_fingerprint=new_fingerprint)\r\n```"

] | 2024-09-25T01:39:51

| 2024-09-30T05:28:15

| 2024-09-30T05:28:04

|

NONE

|

{

"completed": 0,

"percent_completed": 0,

"total": 0

}

| null |

### Describe the bug

```

Map: 6%|██████ | 8000/138120 [19:27<5:16:36, 6.85 examples/s]

Traceback (most recent call last):

File "/workspace/diffusers/examples/controlnet/train_controlnet_sd3.py", line 1416, in <module>

main(args)

File "/workspace/diffusers/examples/controlnet/train_controlnet_sd3.py", line 1132, in main

train_dataset = train_dataset.map(compute_embeddings_fn, batched=True, new_fingerprint=new_fingerprint)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/datasets/arrow_dataset.py", line 560, in wrapper

out: Union["Dataset", "DatasetDict"] = func(self, *args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/datasets/arrow_dataset.py", line 3035, in map

for rank, done, content in Dataset._map_single(**dataset_kwargs):

File "/usr/local/lib/python3.11/dist-packages/datasets/arrow_dataset.py", line 3461, in _map_single

writer.write_batch(batch)

File "/usr/local/lib/python3.11/dist-packages/datasets/arrow_writer.py", line 567, in write_batch

self.write_table(pa_table, writer_batch_size)

File "/usr/local/lib/python3.11/dist-packages/datasets/arrow_writer.py", line 579, in write_table

pa_table = pa_table.combine_chunks()

^^^^^^^^^^^^^^^^^^^^^^^^^

File "pyarrow/table.pxi", line 4387, in pyarrow.lib.Table.combine_chunks

File "pyarrow/error.pxi", line 155, in pyarrow.lib.pyarrow_internal_check_status

File "pyarrow/error.pxi", line 92, in pyarrow.lib.check_status

pyarrow.lib.ArrowInvalid: offset overflow while concatenating arrays

Traceback (most recent call last):

File "/usr/local/bin/accelerate", line 8, in <module>

sys.exit(main())

^^^^^^

File "/usr/local/lib/python3.11/dist-packages/accelerate/commands/accelerate_cli.py", line 48, in main

args.func(args)

File "/usr/local/lib/python3.11/dist-packages/accelerate/commands/launch.py", line 1174, in launch_command

simple_launcher(args)

File "/usr/local/lib/python3.11/dist-packages/accelerate/commands/launch.py", line 769, in simple_launcher

```

### Steps to reproduce the bug

The same dataset trains without problems using the sd1.5 controlnet training script.

### Expected behavior

The script should not randomly fail with the error above.

### Environment info

- `datasets` version: 3.0.0

- Platform: Linux-6.5.0-44-generic-x86_64-with-glibc2.35

- Python version: 3.11.9

- `huggingface_hub` version: 0.25.1

- PyArrow version: 17.0.0

- Pandas version: 2.2.3

- `fsspec` version: 2024.6.1

training on A100
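A rough sketch of why a smaller `batch_size` in `map` can avoid this error, assuming the overflow comes from Arrow's 32-bit offsets (list and string arrays without the `large_` prefix can address at most `2**31 - 1` child values). `max_safe_batch_size` is a hypothetical helper, not part of `datasets`, and the per-example size is a made-up number used only for illustration:

```python
INT32_MAX = 2**31 - 1  # largest offset a 32-bit Arrow list/string array can hold


def max_safe_batch_size(values_per_example: int, limit: int = INT32_MAX) -> int:
    """Largest number of examples whose combined child values stay within `limit`."""
    if values_per_example <= 0:
        raise ValueError("values_per_example must be positive")
    return limit // values_per_example


# Hypothetical figure: each row stores 4_000_000 embedding values.
values_per_example = 4_000_000
print(max_safe_batch_size(values_per_example))  # 536
```

Under that assumption, a batch of 1000 such rows would exceed the limit, while a batch of 16 stays far below it.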

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/90132896?v=4",

"events_url": "https://api.github.com/users/Night1099/events{/privacy}",

"followers_url": "https://api.github.com/users/Night1099/followers",

"following_url": "https://api.github.com/users/Night1099/following{/other_user}",

"gists_url": "https://api.github.com/users/Night1099/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/Night1099",

"id": 90132896,

"login": "Night1099",

"node_id": "MDQ6VXNlcjkwMTMyODk2",

"organizations_url": "https://api.github.com/users/Night1099/orgs",

"received_events_url": "https://api.github.com/users/Night1099/received_events",

"repos_url": "https://api.github.com/users/Night1099/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/Night1099/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Night1099/subscriptions",

"type": "User",

"url": "https://api.github.com/users/Night1099",

"user_view_type": "public"

}

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7167/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/7167/timeline

| null |

completed

| null | null | false

|

https://api.github.com/repos/huggingface/datasets/issues/7164

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/7164/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/7164/comments

|

https://api.github.com/repos/huggingface/datasets/issues/7164/events

|

https://github.com/huggingface/datasets/issues/7164

| 2,544,757,297

|

I_kwDODunzps6Xreox

| 7,164

|

fsspec.exceptions.FSTimeoutError when downloading dataset

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/38216460?v=4",

"events_url": "https://api.github.com/users/timonmerk/events{/privacy}",

"followers_url": "https://api.github.com/users/timonmerk/followers",

"following_url": "https://api.github.com/users/timonmerk/following{/other_user}",

"gists_url": "https://api.github.com/users/timonmerk/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/timonmerk",

"id": 38216460,

"login": "timonmerk",

"node_id": "MDQ6VXNlcjM4MjE2NDYw",

"organizations_url": "https://api.github.com/users/timonmerk/orgs",

"received_events_url": "https://api.github.com/users/timonmerk/received_events",

"repos_url": "https://api.github.com/users/timonmerk/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/timonmerk/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/timonmerk/subscriptions",

"type": "User",

"url": "https://api.github.com/users/timonmerk",

"user_view_type": "public"

}

|

[] |

open

| false

| null |

[] | null |

[

"Hi ! If you check the dataset loading script [here](https://huggingface.co/datasets/openslr/librispeech_asr/blob/main/librispeech_asr.py) you'll see that it downloads the data from OpenSLR, and apparently their storage has timeout issues. It would be great to ultimately host the dataset on Hugging Face instead.\r\n\r\nIn the meantime I can only recommend to try again later :/",

"Ok, still many thanks!",

"I'm also getting this same error but for `CSTR-Edinburgh/vctk`, so I don't think it's the remote host that's timing out, since I also time out at exactly 5 minutes. It seems there is a universal fsspec timeout that's getting hit starting in v3.",

"in v3 we cleaned the download parts of the library to make it more robust for HF downloads and to simplify support of script-based datasets. As a side effect it's not the same code that is used for other hosts, maybe time out handling changed. Anyway it should be possible to tweak fsspec to use retries\r\n\r\nFor example using [aiohttp_retry](https://github.com/inyutin/aiohttp_retry) maybe (haven't tried) ?\r\n\r\n```python\r\nimport fsspec\r\nfrom aiohttp_retry import RetryClient\r\n\r\nfsspec.filesystem(\"http\")._session = RetryClient()\r\n```\r\n\r\nrelated topic : https://github.com/huggingface/datasets/issues/7175",

"Adding a timeout argument to the `fs.get_file` call in `fsspec_get` in `datasets/utils/file_utils.py` might fix this ([source code](https://github.com/huggingface/datasets/blob/65f6eb54aa0e8bb44cea35deea28e0e8fecc25b9/src/datasets/utils/file_utils.py#L330)):\r\n\r\n```python\r\nfs.get_file(path, temp_file.name, callback=callback, timeout=3600)\r\n```\r\n\r\nSetting `timeout=1` fails after about one second, so setting it to 3600 should give us 1h. Haven't really tested this though. I'm also not sure what implications this has and if it causes errors for other `fs` implementations/configurations.\r\n\r\nThis is using `datasets==3.0.1` and Python 3.11.6.\r\n\r\n---\r\n\r\nEdit: This doesn't seem to change the timeout time, but adds a second timeout counter (probably in `fsspec/asyn.py/sync`). So one can reduce the time for downloading like this, but not expand.\r\n\r\n---\r\n\r\nEdit 2: `fs` is of type `fsspec.implementations.http.HTTPFileSystem` which initializes an `aiohttp.ClientSession` using `client_kwargs`. We can pass these when calling `load_dataset`.\r\n\r\n**TLDR; This fixes it:**\r\n\r\n```python\r\nimport datasets, aiohttp\r\ndataset = datasets.load_dataset(\r\n dataset_name,\r\n storage_options={'client_kwargs': {'timeout': aiohttp.ClientTimeout(total=3600)}}\r\n)\r\n```",

"I've handled the issue like this to ensure smoother downloads when using the `datasets` library. \nIf modifying the library is not too inconvenient, this approach could be a good (but tentative) solution.\n\n### Changes Made\n\nModified `datasets.utils.file_utils.fsspec_get` to handle storage options and set a timeout:\n\n```python\ndef fsspec_get(url, temp_file, storage_options=None, desc=None, disable_tqdm=False):\n\n # ---> [ADD]\n if storage_options is None:\n storage_options = {}\n if \"client_kwargs\" not in storage_options:\n storage_options[\"client_kwargs\"] = {}\n storage_options[\"client_kwargs\"][\"timeout\"] = aiohttp.ClientTimeout(total=3600)\n # <---\n\n # The rest of the original code remains unchanged"

] | 2024-09-24T08:45:05

| 2025-01-14T09:48:23

| null |

NONE

|

{

"completed": 0,

"percent_completed": 0,

"total": 0

}

| null |

### Describe the bug

I am trying to download the `librispeech_asr` `clean` dataset, which results in a `FSTimeoutError` exception after downloading around 61% of the data.

### Steps to reproduce the bug

```

import datasets

datasets.load_dataset("librispeech_asr", "clean")

```

The output is as follows:

> Downloading data: 61%|██████████████▋ | 3.92G/6.39G [05:00<03:06, 13.2MB/s]Traceback (most recent call last):

> File "/Users/Timon/Documents/iEEG_deeplearning/wav2vec_pretrain/.venv/lib/python3.12/site-packages/fsspec/asyn.py", line 56, in _runner

> result[0] = await coro

> ^^^^^^^^^^

> File "/Users/Timon/Documents/iEEG_deeplearning/wav2vec_pretrain/.venv/lib/python3.12/site-packages/fsspec/implementations/http.py", line 262, in _get_file

> chunk = await r.content.read(chunk_size)

> ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

> File "/Users/Timon/Documents/iEEG_deeplearning/wav2vec_pretrain/.venv/lib/python3.12/site-packages/aiohttp/streams.py", line 393, in read

> await self._wait("read")

> File "/Users/Timon/Documents/iEEG_deeplearning/wav2vec_pretrain/.venv/lib/python3.12/site-packages/aiohttp/streams.py", line 311, in _wait

> with self._timer:

> ^^^^^^^^^^^

> File "/Users/Timon/Documents/iEEG_deeplearning/wav2vec_pretrain/.venv/lib/python3.12/site-packages/aiohttp/helpers.py", line 713, in __exit__

> raise asyncio.TimeoutError from None

> TimeoutError

>

> The above exception was the direct cause of the following exception:

>

> Traceback (most recent call last):

> File "/Users/Timon/Documents/iEEG_deeplearning/wav2vec_pretrain/load_dataset.py", line 3, in <module>

> datasets.load_dataset("librispeech_asr", "clean")

> File "/Users/Timon/Documents/iEEG_deeplearning/wav2vec_pretrain/.venv/lib/python3.12/site-packages/datasets/load.py", line 2096, in load_dataset

> builder_instance.download_and_prepare(

> File "/Users/Timon/Documents/iEEG_deeplearning/wav2vec_pretrain/.venv/lib/python3.12/site-packages/datasets/builder.py", line 924, in download_and_prepare

> self._download_and_prepare(

> File "/Users/Timon/Documents/iEEG_deeplearning/wav2vec_pretrain/.venv/lib/python3.12/site-packages/datasets/builder.py", line 1647, in _download_and_prepare

> super()._download_and_prepare(

> File "/Users/Timon/Documents/iEEG_deeplearning/wav2vec_pretrain/.venv/lib/python3.12/site-packages/datasets/builder.py", line 977, in _download_and_prepare

> split_generators = self._split_generators(dl_manager, **split_generators_kwargs)

> ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

> File "/Users/Timon/.cache/huggingface/modules/datasets_modules/datasets/librispeech_asr/2712a8f82f0d20807a56faadcd08734f9bdd24c850bb118ba21ff33ebff0432f/librispeech_asr.py", line 115, in _split_generators

> archive_path = dl_manager.download(_DL_URLS[self.config.name])

> ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

> File "/Users/Timon/Documents/iEEG_deeplearning/wav2vec_pretrain/.venv/lib/python3.12/site-packages/datasets/download/download_manager.py", line 159, in download

> downloaded_path_or_paths = map_nested(

> ^^^^^^^^^^^

> File "/Users/Timon/Documents/iEEG_deeplearning/wav2vec_pretrain/.venv/lib/python3.12/site-packages/datasets/utils/py_utils.py", line 512, in map_nested

> _single_map_nested((function, obj, batched, batch_size, types, None, True, None))

> File "/Users/Timon/Documents/iEEG_deeplearning/wav2vec_pretrain/.venv/lib/python3.12/site-packages/datasets/utils/py_utils.py", line 380, in _single_map_nested

> return [mapped_item for batch in iter_batched(data_struct, batch_size) for mapped_item in function(batch)]

> ^^^^^^^^^^^^^^^

> File "/Users/Timon/Documents/iEEG_deeplearning/wav2vec_pretrain/.venv/lib/python3.12/site-packages/datasets/download/download_manager.py", line 216, in _download_batched

> self._download_single(url_or_filename, download_config=download_config)

> File "/Users/Timon/Documents/iEEG_deeplearning/wav2vec_pretrain/.venv/lib/python3.12/site-packages/datasets/download/download_manager.py", line 225, in _download_single

> out = cached_path(url_or_filename, download_config=download_config)

> ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

> File "/Users/Timon/Documents/iEEG_deeplearning/wav2vec_pretrain/.venv/lib/python3.12/site-packages/datasets/utils/file_utils.py", line 205, in cached_path

> output_path = get_from_cache(

> ^^^^^^^^^^^^^^^

> File "/Users/Timon/Documents/iEEG_deeplearning/wav2vec_pretrain/.venv/lib/python3.12/site-packages/datasets/utils/file_utils.py", line 415, in get_from_cache

> fsspec_get(url, temp_file, storage_options=storage_options, desc=download_desc, disable_tqdm=disable_tqdm)

> File "/Users/Timon/Documents/iEEG_deeplearning/wav2vec_pretrain/.venv/lib/python3.12/site-packages/datasets/utils/file_utils.py", line 334, in fsspec_get

> fs.get_file(path, temp_file.name, callback=callback)

> File "/Users/Timon/Documents/iEEG_deeplearning/wav2vec_pretrain/.venv/lib/python3.12/site-packages/fsspec/asyn.py", line 118, in wrapper

> return sync(self.loop, func, *args, **kwargs)

> ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

> File "/Users/Timon/Documents/iEEG_deeplearning/wav2vec_pretrain/.venv/lib/python3.12/site-packages/fsspec/asyn.py", line 101, in sync

> raise FSTimeoutError from return_result

> fsspec.exceptions.FSTimeoutError

> Downloading data: 61%|██████████████▋ | 3.92G/6.39G [05:00<03:09, 13.0MB/s]

### Expected behavior

Complete the download

### Environment info

Python version 3.12.6

Dependencies:

> dependencies = [

> "accelerate>=0.34.2",

> "datasets[audio]>=3.0.0",

> "ipython>=8.18.1",

> "librosa>=0.10.2.post1",

> "torch>=2.4.1",

> "torchaudio>=2.4.1",

> "transformers>=4.44.2",

> ]

MacOS 14.6.1 (23G93)
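The comments on this issue suggest passing a longer client timeout through `storage_options`. The sketch below shows one way to build such a dictionary without mutating the caller's options or overriding a timeout that is already set. `with_client_timeout` is a hypothetical helper (not part of `datasets` or `fsspec`), and the plain integer stands in for whatever timeout object the HTTP client expects:

```python
def with_client_timeout(storage_options, timeout):
    """Return a copy of `storage_options` whose client_kwargs include a timeout.

    An existing client_kwargs["timeout"] is kept; the input dict is not modified.
    """
    options = dict(storage_options or {})
    client_kwargs = dict(options.get("client_kwargs") or {})
    client_kwargs.setdefault("timeout", timeout)
    options["client_kwargs"] = client_kwargs
    return options


# Placeholder value; the thread proposes aiohttp.ClientTimeout(total=3600) here.
print(with_client_timeout(None, 3600))
```

The resulting dictionary could then be passed as `storage_options=` to `load_dataset`, as described in the comments.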

| null |

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7164/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/7164/timeline

| null | null | null | null | false

|

https://api.github.com/repos/huggingface/datasets/issues/7163

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/7163/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/7163/comments

|

https://api.github.com/repos/huggingface/datasets/issues/7163/events

|

https://github.com/huggingface/datasets/issues/7163

| 2,542,361,234

|

I_kwDODunzps6XiVqS

| 7,163

|

Set explicit seed in iterable dataset ddp shuffling example

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/5719745?v=4",

"events_url": "https://api.github.com/users/alex-hh/events{/privacy}",

"followers_url": "https://api.github.com/users/alex-hh/followers",

"following_url": "https://api.github.com/users/alex-hh/following{/other_user}",

"gists_url": "https://api.github.com/users/alex-hh/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/alex-hh",

"id": 5719745,

"login": "alex-hh",

"node_id": "MDQ6VXNlcjU3MTk3NDU=",

"organizations_url": "https://api.github.com/users/alex-hh/orgs",

"received_events_url": "https://api.github.com/users/alex-hh/received_events",

"repos_url": "https://api.github.com/users/alex-hh/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/alex-hh/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/alex-hh/subscriptions",

"type": "User",

"url": "https://api.github.com/users/alex-hh",

"user_view_type": "public"

}

|

[] |

closed

| false

| null |

[] | null |

[

"thanks for reporting !"

] | 2024-09-23T11:34:06

| 2024-09-24T14:40:15

| 2024-09-24T14:40:15

|

CONTRIBUTOR

|

{

"completed": 0,

"percent_completed": 0,

"total": 0

}

| null |

### Describe the bug

In the examples section of the iterable dataset docs https://huggingface.co/docs/datasets/en/package_reference/main_classes#datasets.IterableDataset

the ddp example shuffles without seeding

```python

from datasets.distributed import split_dataset_by_node

ids = ds.to_iterable_dataset(num_shards=512)

ids = ids.shuffle(buffer_size=10_000) # will shuffle the shards order and use a shuffle buffer when you start iterating

ids = split_dataset_by_node(ds, world_size=8, rank=0) # will keep only 512 / 8 = 64 shards from the shuffled lists of shards when you start iterating

dataloader = torch.utils.data.DataLoader(ids, num_workers=4) # will assign 64 / 4 = 16 shards from this node's list of shards to each worker when you start iterating

for example in ids:

pass

```

This code would - I think - raise an error due to the lack of an explicit seed:

https://github.com/huggingface/datasets/blob/2eb4edb97e1a6af2ea62738ec58afbd3812fc66e/src/datasets/iterable_dataset.py#L1707-L1711

### Steps to reproduce the bug

Run example code

### Expected behavior

Add explicit seeding to example code

### Environment info

latest datasets
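To illustrate why every rank needs the same explicit seed, here is a minimal standard-library sketch. It is not how `datasets` implements shuffling or `split_dataset_by_node`; `shards_for_rank` is a hypothetical helper, and the strided assignment of shards to ranks is an assumption made for the example:

```python
import random


def shards_for_rank(num_shards, world_size, rank, seed):
    """Shuffle shard indices with a shared seed, then keep every world_size-th one."""
    order = list(range(num_shards))
    random.Random(seed).shuffle(order)  # same seed -> same order on every rank
    return order[rank::world_size]


world_size = 8
assigned = [shards_for_rank(512, world_size, rank, seed=42) for rank in range(world_size)]
all_shards = sorted(s for shards in assigned for s in shards)
print(all_shards == list(range(512)))  # True
print(len(assigned[0]))  # 64
```

With a shared seed, the ranks partition the shards exactly once each. If each rank shuffled with its own random seed, the slices would come from different orderings and could overlap or miss shards.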

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/lhoestq",

"id": 42851186,

"login": "lhoestq",

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"type": "User",

"url": "https://api.github.com/users/lhoestq",

"user_view_type": "public"

}

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7163/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/7163/timeline

| null |

completed

| null | null | false

|

https://api.github.com/repos/huggingface/datasets/issues/7161

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/7161/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/7161/comments

|

https://api.github.com/repos/huggingface/datasets/issues/7161/events

|

https://github.com/huggingface/datasets/issues/7161

| 2,541,971,931

|

I_kwDODunzps6Xg2nb

| 7,161

|

JSON lines with empty struct raise ArrowTypeError

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

}

|

[

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] |

closed

| false

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

}

|

[

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

}

] | null |

[] | 2024-09-23T08:48:56

| 2024-09-25T04:43:44

| 2024-09-23T11:30:07

|

MEMBER

|

{

"completed": 0,

"percent_completed": 0,

"total": 0

}

| null |

JSON lines with empty struct raise ArrowTypeError: struct fields don't match or are in the wrong order

See example: https://huggingface.co/datasets/wikimedia/structured-wikipedia/discussions/5

> ArrowTypeError: struct fields don't match or are in the wrong order: Input fields: struct<> output fields: struct<pov_count: int64, update_count: int64, citation_needed_count: int64>

Related to:

- #7159

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

}

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7161/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/7161/timeline

| null |

completed

| null | null | false

|

https://api.github.com/repos/huggingface/datasets/issues/7159

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/7159/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/7159/comments

|

https://api.github.com/repos/huggingface/datasets/issues/7159/events

|

https://github.com/huggingface/datasets/issues/7159

| 2,541,865,613

|

I_kwDODunzps6XgcqN

| 7,159

|

JSON lines with missing struct fields raise TypeError: Couldn't cast array

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

}

|

[

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] |

closed

| false

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

}

|

[

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

}

] | null |

[

"Hello,\r\n\r\nI still have the same issue when loading the dataset with the new version:\r\n[https://huggingface.co/datasets/wikimedia/structured-wikipedia/discussions/5](https://huggingface.co/datasets/wikimedia/structured-wikipedia/discussions/5)\r\n\r\nI have downloaded and unzipped the wikimedia/structured-wikipedia dataset locally, but loading it produces the same error.\r\n\r\n```\r\nimport datasets\r\n\r\ndataset = datasets.load_dataset(\"/gpfsdsdir/dataset/HuggingFace/wikimedia/structured-wikipedia/20240916.fr\")\r\n```\r\n```\r\nTypeError: Couldn't cast array of type\r\nstruct<content_url: string, width: int64, height: int64, alternative_text: string>\r\nto\r\n{'content_url': Value(dtype='string', id=None), 'width': Value(dtype='int64', id=None), 'height': Value(dtype='int64', id=None)}\r\n\r\nThe above exception was the direct cause of the following exception:\r\n```\r\nMy version of datasets is 3.0.1"

] | 2024-09-23T07:57:58

| 2024-10-21T08:07:07

| 2024-09-23T11:09:18

|

MEMBER

|

{

"completed": 0,

"percent_completed": 0,

"total": 0

}

| null |

JSON Lines files with missing struct fields raise `TypeError: Couldn't cast array of type`.

See example: https://huggingface.co/datasets/wikimedia/structured-wikipedia/discussions/5

One would expect the missing struct fields to be added with null values.
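
The expected behavior can be illustrated with a minimal, library-independent sketch. The helper name `fill_missing_fields` and the sample records are hypothetical and are not part of `datasets`; the snippet only shows what padding missing struct fields with null values would look like for JSON Lines records.

```python
import json


def fill_missing_fields(record, field_names):
    """Return a dict holding exactly `field_names`, in that order.

    Hypothetical helper (not a `datasets` API): keys absent from
    `record` are filled with None.
    """
    return {name: record.get(name) for name in field_names}


# Two JSON Lines records; the second one lacks `alternative_text`.
lines = [
    '{"content_url": "a.png", "width": 1, "height": 2, "alternative_text": "x"}',
    '{"content_url": "b.png", "width": 3, "height": 4}',
]
records = [json.loads(line) for line in lines]

# Union of all keys, kept in first-seen order.
field_names = list(dict.fromkeys(key for rec in records for key in rec))

# Every record now exposes the same set of fields.
padded = [fill_missing_fields(rec, field_names) for rec in records]
```

With every record sharing the same keys, a single struct type could describe all rows, which is the outcome the report asks for.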

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

}

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7159/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/7159/timeline

| null |

completed

| null | null | false

|

https://api.github.com/repos/huggingface/datasets/issues/7156

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/7156/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/7156/comments

|

https://api.github.com/repos/huggingface/datasets/issues/7156/events

|

https://github.com/huggingface/datasets/issues/7156

| 2,539,360,617

|

I_kwDODunzps6XW5Fp

| 7,156

|

interleave_datasets resets shuffle state

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/511073?v=4",

"events_url": "https://api.github.com/users/jonathanasdf/events{/privacy}",

"followers_url": "https://api.github.com/users/jonathanasdf/followers",

"following_url": "https://api.github.com/users/jonathanasdf/following{/other_user}",

"gists_url": "https://api.github.com/users/jonathanasdf/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/jonathanasdf",

"id": 511073,

"login": "jonathanasdf",

"node_id": "MDQ6VXNlcjUxMTA3Mw==",

"organizations_url": "https://api.github.com/users/jonathanasdf/orgs",

"received_events_url": "https://api.github.com/users/jonathanasdf/received_events",

"repos_url": "https://api.github.com/users/jonathanasdf/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/jonathanasdf/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/jonathanasdf/subscriptions",

"type": "User",

"url": "https://api.github.com/users/jonathanasdf",

"user_view_type": "public"

}

|

[] |

open

| false

| null |

[] | null |

[

"It also does not preserve `split_by_node`, so in the meantime you should call `shuffle` or `split_by_node` AFTER `interleave_datasets` or `concatenate_datasets`"

] | 2024-09-20T17:57:54

| 2024-09-20T17:57:54

| null |

NONE

|

{

"completed": 0,

"percent_completed": 0,

"total": 0

}

| null |

### Describe the bug

```

import datasets
import torch.utils.data


def gen(shards):
    yield {"shards": shards}


def main():
    dataset = datasets.IterableDataset.from_generator(
        gen,
        gen_kwargs={'shards': list(range(25))}
    )
    dataset = dataset.shuffle(buffer_size=1)
    dataset = datasets.interleave_datasets(
        [dataset, dataset], probabilities=[1, 0], stopping_strategy="all_exhausted"
    )
    dataloader = torch.utils.data.DataLoader(
        dataset,
        batch_size=8,
        num_workers=8,
    )
    for i, batch in enumerate(dataloader):
        print(batch)
        if i >= 10:
            break


if __name__ == "__main__":
    main()

```

### Steps to reproduce the bug

Run the script. It will output:

```

{'shards': [tensor([ 0, 8, 16, 24, 0, 8, 16, 24])]}

{'shards': [tensor([ 1, 9, 17, 1, 9, 17, 1, 9])]}

{'shards': [tensor([ 2, 10, 18, 2, 10, 18, 2, 10])]}

{'shards': [tensor([ 3, 11, 19, 3, 11, 19, 3, 11])]}

{'shards': [tensor([ 4, 12, 20, 4, 12, 20, 4, 12])]}

{'shards': [tensor([ 5, 13, 21, 5, 13, 21, 5, 13])]}

{'shards': [tensor([ 6, 14, 22, 6, 14, 22, 6, 14])]}

{'shards': [tensor([ 7, 15, 23, 7, 15, 23, 7, 15])]}

{'shards': [tensor([ 0, 8, 16, 24, 0, 8, 16, 24])]}

{'shards': [tensor([17, 1, 9, 17, 1, 9, 17, 1])]}

{'shards': [tensor([18, 2, 10, 18, 2, 10, 18, 2])]}

```

### Expected behavior

The shards should be shuffled.

### Environment info

- `datasets` version: 3.0.0

- Platform: Linux-5.15.153.1-microsoft-standard-WSL2-x86_64-with-glibc2.35

- Python version: 3.10.12

- `huggingface_hub` version: 0.25.0

- PyArrow version: 17.0.0

- Pandas version: 2.0.3

- `fsspec` version: 2023.6.0

| null |

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7156/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/7156/timeline

| null | null | null | null | false

|

https://api.github.com/repos/huggingface/datasets/issues/7155

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/7155/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/7155/comments

|

https://api.github.com/repos/huggingface/datasets/issues/7155/events

|

https://github.com/huggingface/datasets/issues/7155

| 2,533,641,870

|

I_kwDODunzps6XBE6O

| 7,155

|

Dataset viewer not working! Failure due to more than 32 splits.

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/81933585?v=4",

"events_url": "https://api.github.com/users/sleepingcat4/events{/privacy}",

"followers_url": "https://api.github.com/users/sleepingcat4/followers",

"following_url": "https://api.github.com/users/sleepingcat4/following{/other_user}",

"gists_url": "https://api.github.com/users/sleepingcat4/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/sleepingcat4",

"id": 81933585,

"login": "sleepingcat4",

"node_id": "MDQ6VXNlcjgxOTMzNTg1",

"organizations_url": "https://api.github.com/users/sleepingcat4/orgs",

"received_events_url": "https://api.github.com/users/sleepingcat4/received_events",

"repos_url": "https://api.github.com/users/sleepingcat4/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/sleepingcat4/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/sleepingcat4/subscriptions",

"type": "User",

"url": "https://api.github.com/users/sleepingcat4",

"user_view_type": "public"

}

|

[] |

closed

| false

| null |

[] | null |

[

"I have fixed it! But I would appreciate a new feature where I could iterate over the files and see what each one looks like."

] | 2024-09-18T12:43:21

| 2024-09-18T13:20:03

| 2024-09-18T13:20:03

|

NONE

|

{

"completed": 0,

"percent_completed": 0,

"total": 0

}

| null |

Hello guys,

I have a dataset, and I didn't know I couldn't upload more than 32 splits. Now my dataset viewer is not working. I no longer have the dataset locally on my node, and recreating it would take a week. I have to publish the dataset this coming Monday. I have read about the recommended practice for resolving this and avoiding the issue in the future, but at the moment I need a quick fix for two of my datasets.

I don't want to break or change anything; I just want everyone to be able to see the dataset publicly and interact with it. Can you please help me?

https://huggingface.co/datasets/laion/Wikipedia-X

https://huggingface.co/datasets/laion/Wikipedia-X-Full

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/81933585?v=4",

"events_url": "https://api.github.com/users/sleepingcat4/events{/privacy}",

"followers_url": "https://api.github.com/users/sleepingcat4/followers",

"following_url": "https://api.github.com/users/sleepingcat4/following{/other_user}",

"gists_url": "https://api.github.com/users/sleepingcat4/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/sleepingcat4",

"id": 81933585,

"login": "sleepingcat4",

"node_id": "MDQ6VXNlcjgxOTMzNTg1",

"organizations_url": "https://api.github.com/users/sleepingcat4/orgs",

"received_events_url": "https://api.github.com/users/sleepingcat4/received_events",

"repos_url": "https://api.github.com/users/sleepingcat4/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/sleepingcat4/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/sleepingcat4/subscriptions",

"type": "User",

"url": "https://api.github.com/users/sleepingcat4",

"user_view_type": "public"

}

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7155/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/7155/timeline

| null |

completed

| null | null | false

|

https://api.github.com/repos/huggingface/datasets/issues/7153

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/7153/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/7153/comments

|

https://api.github.com/repos/huggingface/datasets/issues/7153/events

|

https://github.com/huggingface/datasets/issues/7153

| 2,532,788,555

|

I_kwDODunzps6W90lL

| 7,153

|

Support data files with .ndjson extension

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

}

|

[

{

"color": "a2eeef",

"default": true,

"description": "New feature or request",

"id": 1935892871,

"name": "enhancement",

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement"

}

] |

closed

| false

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

}

|

[

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

}

] | null |

[] | 2024-09-18T05:54:45

| 2024-09-19T11:25:15

| 2024-09-19T11:25:15

|

MEMBER

|

{

"completed": 0,

"percent_completed": 0,

"total": 0

}

| null |

### Feature request

Support data files with `.ndjson` extension.

### Motivation

We already support data files with `.jsonl` extension.
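
As a rough illustration of why the two extensions can share one code path, here is a minimal sketch using only the standard library. The mapping `EXTENSION_TO_FORMAT` and the helpers `infer_format` and `parse_json_lines` are hypothetical names chosen for this example; they are not the actual `datasets` internals.

```python
import json
from pathlib import PurePosixPath

# Hypothetical mapping, for illustration only; not the real `datasets` table.
EXTENSION_TO_FORMAT = {".jsonl": "json", ".ndjson": "json"}


def infer_format(filename):
    """Return the format name for a data file, or None if the extension is unknown."""
    return EXTENSION_TO_FORMAT.get(PurePosixPath(filename).suffix.lower())


def parse_json_lines(text):
    """Parse newline-delimited JSON: one JSON document per non-empty line."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


# The same parser handles content from either extension.
rows = parse_json_lines('{"id": 1}\n{"id": 2}\n')
```

Both extensions name the same newline-delimited layout, so under this assumption supporting `.ndjson` amounts to one extra entry in the extension table.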

### Your contribution

I am opening a PR.

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

}

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7153/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/7153/timeline

| null |

completed

| null | null | false

|

https://api.github.com/repos/huggingface/datasets/issues/7150

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/7150/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/7150/comments

|

https://api.github.com/repos/huggingface/datasets/issues/7150/events

|

https://github.com/huggingface/datasets/issues/7150

| 2,527,571,175

|

I_kwDODunzps6Wp6zn

| 7,150

|

WebDataset loader splits keys differently than WebDataset library

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

}

|

[

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] |

closed

| false

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

}

|

[

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

}

] | null |

[] | 2024-09-16T06:02:47

| 2024-09-16T15:26:35

| 2024-09-16T15:26:35

|

MEMBER

|

{

"completed": 0,

"percent_completed": 0,

"total": 0

}

| null |

As reported by @ragavsachdeva (see discussion here: https://github.com/huggingface/datasets/pull/7144#issuecomment-2348307792), our webdataset loader is not aligned with the `webdataset` library when splitting keys from filenames.

For example, we get a different key splitting for filename `/some/path/22.0/1.1.png`:

- datasets library: `/some/path/22` and `0/1.1.png`

- webdataset library: `/some/path/22.0/1` and `1.png`

```python

import webdataset as wds

wds.tariterators.base_plus_ext("/some/path/22.0/1.1.png")

# ('/some/path/22.0/1', '1.png')

```
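
The two splitting behaviors described above can be reproduced with a small standard-library sketch. The function names and the regular expression are assumptions written for this illustration; they are not copied from either library, and only the outputs for the example path are taken from the report.

```python
import re


def split_on_first_dot(path):
    """Split at the first '.' anywhere in the path (the result reported for `datasets`)."""
    base, _, ext = path.partition(".")
    return base, ext


def split_on_first_dot_of_basename(path):
    """Split at the first '.' after the last '/' (the result shown for `webdataset`)."""
    # Group 1: optional directory part plus the basename up to its first dot.
    # Group 2: everything after that dot, which may itself contain dots.
    match = re.match(r"^((?:.*/)?[^.]+)\.([^/]*)$", path)
    if match is None:
        return None, None
    return match.group(1), match.group(2)


path = "/some/path/22.0/1.1.png"
naive = split_on_first_dot(path)                       # dots in directories break the key
basename_aware = split_on_first_dot_of_basename(path)  # directory dots are ignored
```

The difference only shows up when a directory name contains a dot, which is why paths such as `22.0/` expose the mismatch.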

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

}

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7150/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/7150/timeline

| null |

completed

| null | null | false

|

https://api.github.com/repos/huggingface/datasets/issues/7149

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/7149/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/7149/comments

|

https://api.github.com/repos/huggingface/datasets/issues/7149/events

|

https://github.com/huggingface/datasets/issues/7149

| 2,524,497,448

|

I_kwDODunzps6WeMYo

| 7,149

|

Datasets Unknown Keyword Argument Error - task_templates

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/51288316?v=4",

"events_url": "https://api.github.com/users/varungupta31/events{/privacy}",

"followers_url": "https://api.github.com/users/varungupta31/followers",

"following_url": "https://api.github.com/users/varungupta31/following{/other_user}",

"gists_url": "https://api.github.com/users/varungupta31/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/varungupta31",

"id": 51288316,

"login": "varungupta31",

"node_id": "MDQ6VXNlcjUxMjg4MzE2",

"organizations_url": "https://api.github.com/users/varungupta31/orgs",

"received_events_url": "https://api.github.com/users/varungupta31/received_events",

"repos_url": "https://api.github.com/users/varungupta31/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/varungupta31/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/varungupta31/subscriptions",

"type": "User",

"url": "https://api.github.com/users/varungupta31",

"user_view_type": "public"

}

|

[] |

closed

| false

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

}

|

[

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

}

] | null |

[

"Thanks for reporting.\r\n\r\nWe have been fixing most Hub datasets to remove the deprecated (and now unsupported) task templates, but we missed \"facebook/winoground\".\r\n\r\nIt is fixed now: https://huggingface.co/datasets/facebook/winoground/discussions/8\r\n\r\n",

"Hello @albertvillanova \r\n\r\nI got the same error while loading this dataset: https://huggingface.co/datasets/alaleye/aloresb...\r\n\r\nHow can I fix it?\r\nThanks",

"I am getting the same error with the code below. Is there any fix for this?\n\n```\nfrom datasets import load_dataset\n\nminds = load_dataset(\"PolyAI/minds14\", name=\"en-AU\", split=\"train\")\nminds\n```"

] | 2024-09-13T10:30:57

| 2025-03-06T07:11:55

| 2024-09-13T14:10:48

|

NONE

|

{

"completed": 0,

"percent_completed": 0,

"total": 0

}

| null |

### Describe the bug

Issue

```python

from datasets import load_dataset

examples = load_dataset('facebook/winoground', use_auth_token=<YOUR USER ACCESS TOKEN>)

```

This gives the following error:

```

TypeError: DatasetInfo.__init__() got an unexpected keyword argument 'task_templates'

```

Downgrading to `datasets` v2.21.0 resolves it.
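
The error message has the shape Python produces when stored metadata contains a key that a class constructor no longer accepts. The sketch below reproduces that shape with a hypothetical `Info` dataclass; it is a guess at the general mechanism under that assumption, not a description of how `datasets` actually loads `DatasetInfo`.

```python
from dataclasses import dataclass, fields


@dataclass
class Info:
    """Hypothetical stand-in for a metadata class; not the real `DatasetInfo`."""
    description: str = ""
    license: str = ""


def from_dict_strict(data):
    # Forwards every key to the constructor, so an unknown key raises TypeError.
    return Info(**data)


def from_dict_lenient(data):
    # Keeps only the keys that are declared dataclass fields.
    known = {f.name for f in fields(Info)}
    return Info(**{key: value for key, value in data.items() if key in known})


# Metadata written by an older version that still had `task_templates`.
stored = {"description": "demo", "task_templates": []}

try:
    from_dict_strict(stored)
    strict_error = None
except TypeError as err:
    strict_error = str(err)

info = from_dict_lenient(stored)
```

In this sketch the strict path fails on the stale key while the lenient path ignores it, which is consistent with an older release still accepting the same metadata.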

### Steps to reproduce the bug

1. `pip install datasets`

2.

```python

from datasets import load_dataset

examples = load_dataset('facebook/winoground', use_auth_token=<YOUR USER ACCESS TOKEN>)

```

### Expected behavior

Should load the dataset correctly.

### Environment info

- Datasets version `3.0.0`

- `transformers` version: 4.45.0.dev0

- Platform: Linux-6.8.0-40-generic-x86_64-with-glibc2.35

- Python version: 3.12.4

- Huggingface_hub version: 0.24.6

- Safetensors version: 0.4.5

- Accelerate version: 0.35.0.dev0

- Accelerate config: not found

- PyTorch version (GPU?): 2.4.1+cu121 (True)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using GPU in script?: Yes

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

}

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7149/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/7149/timeline

| null |

completed

| null | null | false

|

https://api.github.com/repos/huggingface/datasets/issues/7148

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/7148/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/7148/comments

|

https://api.github.com/repos/huggingface/datasets/issues/7148/events

|

https://github.com/huggingface/datasets/issues/7148

| 2,523,833,413

|

I_kwDODunzps6WbqRF

| 7,148

|

Bug: Error when downloading mteb/mtop_domain

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/77958037?v=4",

"events_url": "https://api.github.com/users/ZiyiXia/events{/privacy}",

"followers_url": "https://api.github.com/users/ZiyiXia/followers",

"following_url": "https://api.github.com/users/ZiyiXia/following{/other_user}",

"gists_url": "https://api.github.com/users/ZiyiXia/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/ZiyiXia",

"id": 77958037,

"login": "ZiyiXia",

"node_id": "MDQ6VXNlcjc3OTU4MDM3",

"organizations_url": "https://api.github.com/users/ZiyiXia/orgs",

"received_events_url": "https://api.github.com/users/ZiyiXia/received_events",

"repos_url": "https://api.github.com/users/ZiyiXia/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/ZiyiXia/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ZiyiXia/subscriptions",

"type": "User",

"url": "https://api.github.com/users/ZiyiXia",

"user_view_type": "public"

}

|

[] |

closed

| false

| null |

[] | null |

[

"Could you please try with `force_redownload` instead?\r\nEDIT:\r\n```python\r\ndata = load_dataset(\"mteb/mtop_domain\", \"en\", download_mode=\"force_redownload\")\r\n```",

"Seems the error is still there",

"I am not able to reproduce the issue:\r\n```python\r\nIn [1]: from datasets import load_dataset\r\n\r\nIn [2]: data = load_dataset(\"mteb/mtop_domain\", \"en\")\r\n\r\nIn [3]: data\r\nOut[3]: DatasetDict({\r\n train: Dataset({\r\n features: ['id', 'text', 'label', 'label_text'],\r\n num_rows: 15667\r\n })\r\n validation: Dataset({\r\n features: ['id', 'text', 'label', 'label_text'],\r\n num_rows: 2235\r\n })\r\n test: Dataset({\r\n features: ['id', 'text', 'label', 'label_text'],\r\n num_rows: 4386\r\n })\r\n})\r\n```",

"Just solved this by reinstalling Huggingface Hub and datasets. Thanks for your help!"

] | 2024-09-13T04:09:39

| 2024-09-14T15:11:35

| 2024-09-14T15:11:35

|

NONE

|

{

"completed": 0,

"percent_completed": 0,

"total": 0

}

| null |

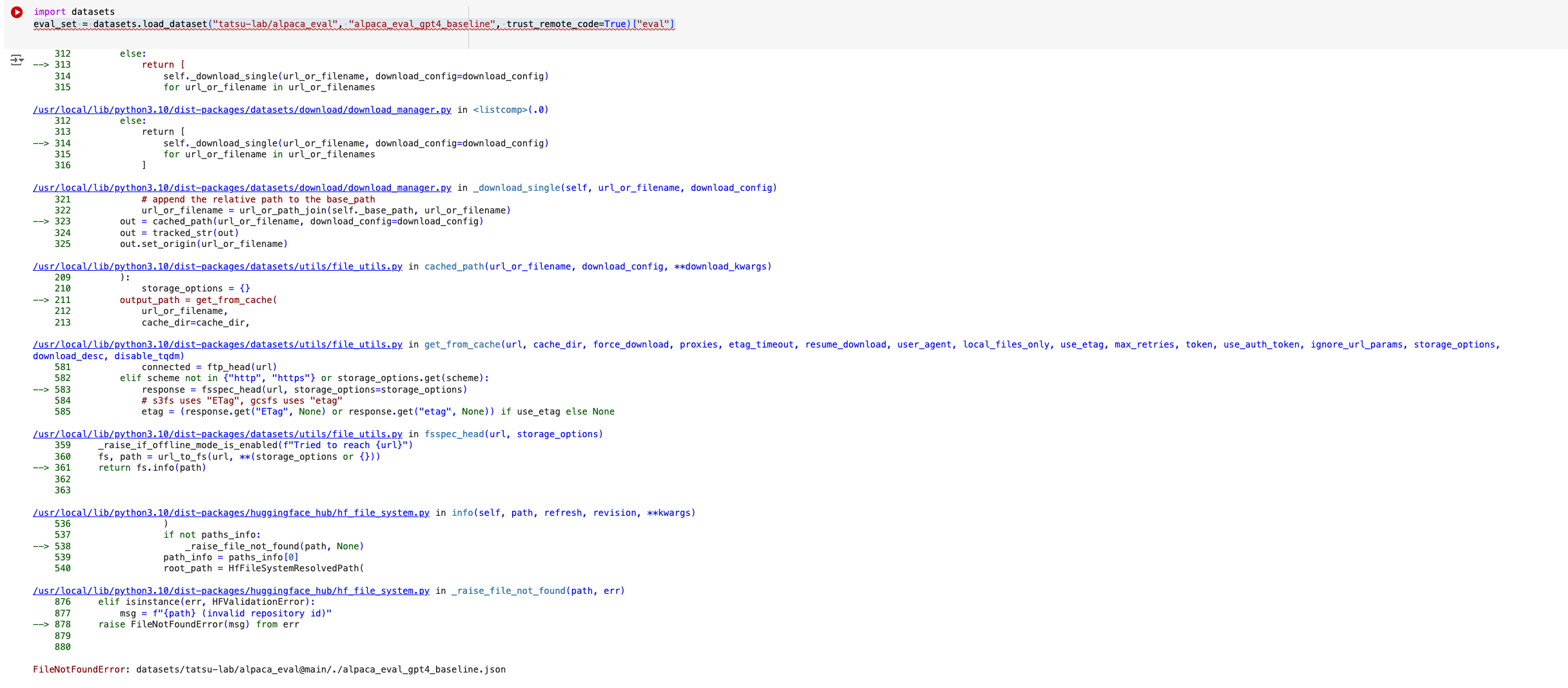

### Describe the bug

When downloading the dataset "mteb/mtop_domain", I ran into the following error:

```
Traceback (most recent call last):
  File "/share/project/xzy/test/test_download.py", line 3, in <module>
    data = load_dataset("mteb/mtop_domain", "en", trust_remote_code=True)
  File "/opt/conda/lib/python3.10/site-packages/datasets/load.py", line 2606, in load_dataset
    builder_instance = load_dataset_builder(
  File "/opt/conda/lib/python3.10/site-packages/datasets/load.py", line 2277, in load_dataset_builder
    dataset_module = dataset_module_factory(
  File "/opt/conda/lib/python3.10/site-packages/datasets/load.py", line 1923, in dataset_module_factory
    raise e1 from None
  File "/opt/conda/lib/python3.10/site-packages/datasets/load.py", line 1896, in dataset_module_factory
    ).get_module()
  File "/opt/conda/lib/python3.10/site-packages/datasets/load.py", line 1507, in get_module
    local_path = self.download_loading_script()
  File "/opt/conda/lib/python3.10/site-packages/datasets/load.py", line 1467, in download_loading_script
    return cached_path(file_path, download_config=download_config)
  File "/opt/conda/lib/python3.10/site-packages/datasets/utils/file_utils.py", line 211, in cached_path
    output_path = get_from_cache(
  File "/opt/conda/lib/python3.10/site-packages/datasets/utils/file_utils.py", line 689, in get_from_cache
    fsspec_get(
  File "/opt/conda/lib/python3.10/site-packages/datasets/utils/file_utils.py", line 395, in fsspec_get
    fs.get_file(path, temp_file.name, callback=callback)
  File "/opt/conda/lib/python3.10/site-packages/huggingface_hub/hf_file_system.py", line 648, in get_file
    http_get(
  File "/opt/conda/lib/python3.10/site-packages/huggingface_hub/file_download.py", line 578, in http_get
    raise EnvironmentError(
OSError: Consistency check failed: file should be of size 2191 but has size 2190 ((…)ets/mteb/mtop_domain@main/mtop_domain.py).
We are sorry for the inconvenience. Please retry with `force_download=True`.
If the issue persists, please let us know by opening an issue on https://github.com/huggingface/huggingface_hub.
```

I also tried downloading through HF datasets directly, but got the same error as above.

```python
from datasets import load_dataset

data = load_dataset("mteb/mtop_domain", "en")
```

### Steps to reproduce the bug

```python
from datasets import load_dataset

data = load_dataset("mteb/mtop_domain", "en", force_download=True)
```

The same error occurs both with and without `force_download=True`.

### Expected behavior

The dataset should download successfully.

### Environment info

- datasets version: 2.21.0

- huggingface-hub version: 0.24.6
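
For readers unfamiliar with the message in the traceback, here is a minimal sketch of what a size consistency check of this kind looks like. It is an illustration only, not the actual `huggingface_hub` implementation: the helper name `check_file_size` and the temporary file are hypothetical, and only the sizes 2191 and 2190 come from the error above.

```python
import os
import tempfile


def check_file_size(path: str, expected_size: int) -> None:
    """Hypothetical helper: raise OSError if the file is not exactly `expected_size` bytes."""
    actual_size = os.path.getsize(path)
    if actual_size != expected_size:
        raise OSError(
            f"Consistency check failed: file should be of size {expected_size} "
            f"but has size {actual_size} ({path})."
        )


# Write a temporary file that is one byte short, mirroring the sizes in the report.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"x" * 2190)
    tmp_path = tmp.name

try:
    check_file_size(tmp_path, expected_size=2191)
    result = "ok"
except OSError as err:
    result = str(err)
finally:
    os.remove(tmp_path)

print(result)
```

The sketch only shows how a one-byte mismatch between the expected and the downloaded size surfaces as an `OSError`; it says nothing about why the sizes differed in this environment.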

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

}

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7148/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/7148/timeline

| null |

completed

| null | null | false

|

https://api.github.com/repos/huggingface/datasets/issues/7147

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/7147/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/7147/comments

|

https://api.github.com/repos/huggingface/datasets/issues/7147/events

|

https://github.com/huggingface/datasets/issues/7147

| 2,523,129,465

|

I_kwDODunzps6WY-Z5

| 7,147

|

IterableDataset strange deadlock

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/511073?v=4",

"events_url": "https://api.github.com/users/jonathanasdf/events{/privacy}",

"followers_url": "https://api.github.com/users/jonathanasdf/followers",

"following_url": "https://api.github.com/users/jonathanasdf/following{/other_user}",

"gists_url": "https://api.github.com/users/jonathanasdf/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/jonathanasdf",

"id": 511073,

"login": "jonathanasdf",

"node_id": "MDQ6VXNlcjUxMTA3Mw==",

"organizations_url": "https://api.github.com/users/jonathanasdf/orgs",

"received_events_url": "https://api.github.com/users/jonathanasdf/received_events",

"repos_url": "https://api.github.com/users/jonathanasdf/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/jonathanasdf/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/jonathanasdf/subscriptions",

"type": "User",

"url": "https://api.github.com/users/jonathanasdf",

"user_view_type": "public"

}

|

[] |

closed

| false

| null |

[] | null |

[